Inserted and deleted tables are 2 pseudo tables preseting the before and after images of modified rows. They can only be accessed from within triggers. Those two have been around for quite long time but it’s hard to find any articles to tell how they are populated. Some rumors said they are retrieved from transaction logs in SQL Server 2000. Recently, I was asked to evaluate the performance impact of using trigger for my customer’s project. I did some investigation and got some interesting results.

The first, let’s create a table with some data in an user database.

create table test

(

ID int identity(1,1) primary key,

Data char(10) not null default(replicate('x', 10)),

Data1 char(10) not null default(replicate('y', 10)),

Data2 char(10) not null default(replicate('z', 10))

)

go

insert into test(Data) default values

go 10

10 records now are in my test table. Now let’s create a trigger on this table

create trigger T_Test on test for insert, delete, update as begin return end go

Note that I don’t have any code doing anything within the trigger. The next, we will need to setup SQL Server Profiler to capture Lock:Acquired and Lock:Released. Those two will tell you what locks are granted and released on the resources (rows, pages, extents, etc.). When an X lock applied to a page, you could make an assumption that the page is possibly modified internally by SQL Server.

After the profiler is setup and running, let’s run code below.

begin transaction

update test set data = replicate('a', 10)

rollback

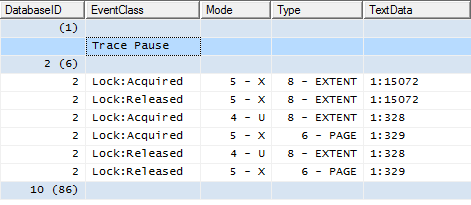

Here are what in the trace: Extent 1:15072, 1:328, and 1:329 in tempdb are “touched” by SQL Server.

Use DBCC Page command to check the content of those pages in tempdb. Page 328 and 329 are IAM pages. The result of page 15072 is very interesting.

Record Type = INDEX_RECORD Record Attributes = VARIABLE_COLUMNS Record Size = 102 Memory Dump @0x0000000016EDA6C0 0000000000000000: 26010066 000000c0 8b000000 00110000 00000000 &..f...À?........... 0000000000000014: 000a0000 00000000 0000002f 00000000 01260000 .........../.....&.. 0000000000000028: 00000000 007f01e0 3a000001 000e0038 00000000 .......à:......8.... 000000000000003C: 00100026 00090000 00616161 61616161 61616179 ...&. ...aaaaaaaaaay 0000000000000050: 79797979 79797979 797a7a7a 7a7a7a7a 7a7a7a04 yyyyyyyyyzzzzzzzzzz. 0000000000000064: 0000 .. Slot 17, Offset 0x726, Length 102, DumpStyle BYTE Record Type = INDEX_RECORD Record Attributes = VARIABLE_COLUMNS Record Size = 102 Memory Dump @0x0000000016EDA726 0000000000000000: 26010066 000000c0 8b000000 00120000 00000000 &..f...À?........... 0000000000000014: 000a0000 00000000 0000002f 00000000 012600c0 .........../.....&.À 0000000000000028: 8b000000 001100e0 3a000001 000f0000 00000000 ?......à:........... 000000000000003C: 00100026 00090000 00787878 78787878 78787879 ...&. ...xxxxxxxxxxy 0000000000000050: 79797979 79797979 797a7a7a 7a7a7a7a 7a7a7a04 yyyyyyyyyzzzzzzzzzz. 0000000000000064: 0000 .. Slot 18, Offset 0x78c, Length 102, DumpStyle BYTE Record Type = INDEX_RECORD Record Attributes = VARIABLE_COLUMNS Record Size = 102 Memory Dump @0x0000000016EDA78C 0000000000000000: 26010066 000000c0 8b000000 00130000 00000000 &..f...À?........... 0000000000000014: 000a0000 00000000 0000002f 00000000 01260000 .........../.....&.. 0000000000000028: 00000000 00a801e0 3a000001 00100038 00000000 .....¨.à:......8.... 000000000000003C: 00100026 000a0000 00616161 61616161 61616179 ...&.....aaaaaaaaaay 0000000000000050: 79797979 79797979 797a7a7a 7a7a7a7a 7a7a7a04 yyyyyyyyyzzzzzzzzzz. 0000000000000064: 0000 .. Slot 19, Offset 0x7f2, Length 102, DumpStyle BYTE Record Type = INDEX_RECORD Record Attributes = VARIABLE_COLUMNS Record Size = 102 Memory Dump @0x0000000016EDA7F2 0000000000000000: 26010066 000000c0 8b000000 00140000 00000000 &..f...À?........... 0000000000000014: 000a0000 00000000 0000002f 00000000 012600c0 .........../.....&.À 0000000000000028: 8b000000 001300e0 3a000001 00110000 00000000 ?......à:........... 000000000000003C: 00100026 000a0000 00787878 78787878 78787879 ...&.....xxxxxxxxxxy 0000000000000050: 79797979 79797979 797a7a7a 7a7a7a7a 7a7a7a04 yyyyyyyyyzzzzzzzzzz. 0000000000000064: 0000 .. OFFSET TABLE: Row - Offset 19 (0x13) - 2034 (0x7f2) 18 (0x12) - 1932 (0x78c) 17 (0x11) - 1830 (0x726) 16 (0x10) - 1728 (0x6c0) 15 (0xf) - 1626 (0x65a) 14 (0xe) - 1524 (0x5f4) 13 (0xd) - 1422 (0x58e) 12 (0xc) - 1320 (0x528) 11 (0xb) - 1218 (0x4c2) 10 (0xa) - 1116 (0x45c) 9 (0x9) - 1014 (0x3f6) 8 (0x8) - 912 (0x390) 7 (0x7) - 810 (0x32a) 6 (0x6) - 708 (0x2c4) 5 (0x5) - 606 (0x25e) 4 (0x4) - 504 (0x1f8) 3 (0x3) - 402 (0x192) 2 (0x2) - 300 (0x12c) 1 (0x1) - 198 (0xc6) 0 (0x0) - 96 (0x60)

20 records are in the page. Em… I wouldn’t be surprised since we should have 10 records in inserted table and 10 in the deleted table in this case. Surprisingly, Deleted and inserted tables are just in one physical storage structure in tempdb and records for inserted and deleted are interlaced! (It’s impossible for SQL Server to use one data page to host 2 physical tables.) So, inserted and deleted are 2 logical tables where the data is derived from one physical table.

The size of the record is 102. I cannot wait to dump the records in the test table by DBCC Page. (To save some words, I don’t post the result of the DBCC command here) The size of the record in test table is 41. The record in tempdb includes all bits in the records in the table in test table. 61 bytes of overhead are added for each row.

I wonder what would happen if the size of the record in my table is the maximum record size – 8060? Will the trigger stop working?

drop table test

go

create table test

(

ID int identity(1,1) primary key,

Data char(10) not null default(replicate('x', 10)),

Data1 char(10) not null default(replicate('y', 10)),

Data2 char(10) not null default(replicate('z', 10)) ,

Data3 char(8000) not null default(replicate('O', 8000)),

Data4 char(19) not null default(replicate('P', 19)),

)

go

insert into test(Data) default values

go 10

go

create trigger T_Test on test

for insert, delete, update

as

begin

return

end

go

begin transaction

update test set data = replicate('a', 10)

rollback

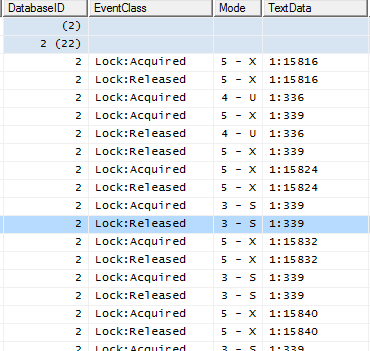

Trigger’s working. From SQL Profiler, what I got is

From here, what I could see is that while inserted and deleted are populated, records are not written to tempdb in row by row mode. It’s inserted extent by extent mode. I dumped page 1586 in tempdb

PAGE: (1:15816) .... 0000000000000000: 26010079 00020053 8c000000 00010000 00000000 &..y...S?........... 0000000000000014: 000a0000 00000000 0000003d 00000000 01791f28 ...........=.....y.( 0000000000000028: e6020000 00009900 00000000 00000057 f5fe0700 æ..............Wõþ.. 000000000000003C: 004f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f .OOOOOOOOOOOOOOOOOOO 0000000000000050: 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f50 OOOOOOOOOOOOOOOOOOOP 0000000000000064: 50505050 50505050 50505050 50505050 50500600 PPPPPPPPPPPPPPPPPP.. 0000000000000078: 00

Obviously it’s the last part of my record. 61 bytes of over head is still there. In page 1587

PAGE: (1:15817) ... Record Type = INDEX_RECORD Record Attributes = VARIABLE_COLUMNS Record Size = 8061 Memory Dump @0x00000000161DA060 0000000000000000: 2601007d 1f010c53 8c000000 00010000 00000000 &..}...S?........... 0000000000000014: 000a0000 00000000 0000003d 00000000 01791fc8 ...........=.....y.È 0000000000000028: 3d000001 00000000 00000000 00000000 00000000 =................... 000000000000003C: 00100079 1f010000 00787878 78787878 78787879 ...y.....xxxxxxxxxxy 0000000000000050: 79797979 79797979 797a7a7a 7a7a7a7a 7a7a7a4f yyyyyyyyyzzzzzzzzzzO 0000000000000064: 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f OOOOOOOOOOOOOOOOOOOO 0000000000000078: 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f OOOOOOOOOOOOOOOOOOOO 000000000000008C: 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f 4f4f4f4f OOOOOOOOOOOOOOOOOOOO ...

This is a record in deleted table. Size of the record is 8061. 61 bytes of overhead is added as well. Wait, it’s not just 61 * 2 bytes of overhead…In this case, SQL Server actually adds one page overhead for one inserted or deleted record in tempdb, which means you need to spend 2 pages for one record if your base table record is big.

So, the overhead of using triggers for tempdb is significant. If you could get around it, try to do so. If triggers must exist, optimize your tempdb. Keeping mind that, there are 61 bytes of overhead added in each of the record in inserted and deleted table. If your record size is big – close to 8060, you may get 1 page overhead for each of the record in tempdb. Records of those 2 pseudo tables in tempdb are in one HEAP, they are not force ordered even you have clustered primary key and none clustered indexes in the base table. Full scan of both pseudo tables is needed even you just access one of them(except you access them through EXISTS). If complex joinings with pseudo tables must exist in the trigger(s), you might need to consider number of records in those 2 tables – number of records are modified in the base table. Bigger set will give more performance issue in the trigger…

It sounds pretty negative. But it does not mean you should not use triggers. In most of the OLTP system, data modification is not as significant as data reading. Sometime, when the size of the record is small and changed records are just few, SQL Server does not spill the data to tempdb. (At least I saw the cases that locks are not granted to any pages in tempdb).

The most important thing is you know what’s behind…

What about the INSTEAD OF triggers? It behaves slightly different than AFTER triggers. You can use the same way to figure it out.

I had a lot of fun on this topic with my coworker who worked with me on this research and provided valuable suggestions and comments.

This is brought by John Huang, http://www.sqlnotes.info.

Hi John,

It’s documented (http://msdn.microsoft.com/en-us/library/ms175492(SQL.105).aspx) that triggers use the version store in tempdb (append-only storage units and so on) that’s all you’re seeing here, isn’t it?

It mentioned that triggers use tempdb for inserted and deleted tables. But it did not say how they are stored, overhead, etc.

I was surprised by the statement: “Inserted and deleted tables are 2 pseudo tables preseting the before and after images of modified rows. They can only be accessed from within triggers.” as I have always assumed the OUTPUT column list clause on MERGE, INSERT, UPDATE and DELETE (e.g. DELETED.* and INSERTED.*) were sourced in the same way as a trigger returns those values.

I wonder what would happen if the size of the record in my table is the maximum record size – 8060? Will the trigger stop working?

Back when sql 2000 was the standard, the maximum size of a row was 8060; We had a table which if you summed the maximum sizes of all the columns together was larger than that, but none of our clients ever used enough of the space in all the various columns to exceed the limit! Anyway, we had a trigger on the table using the inserted/deleted tables, and never had any problem with it.

Given that in sql 2005 a row can break a page, and in 2008 a column can break a page, I would expect there is capability available which will inserted and deleted tables to function in like manner in these and later versions of sql.

Nope, it will use 2 pages