People are confused about the differences amoung user tables, temp tables, and table variables in terms of inserting performance. I did a test today on my laptop – Core I7 Q720, 8GB RAM, SSD. I have both tempdb and user db on the same hard drive. I tested following scenarios over permanent tables, permanent tables in tempdb, global temp tables, local temp tables and table variables.

- Heap: inserting records to a heap one by one, each insert is an auto commit operation

- Primary Key: clustered primary key over an identity column. each insert is an auto commit operation

- Heap – Transaction: all insertions are in one transaction for each batch, table is a heap

- PK – Transaction: all insertions are in one transaction for each batch, table has clustered primary key on an identity column.

- Heap BulkLoad: Bulk load data to a heap

- PK BulkLoad: Bulk load data to a table with clustered index on an identity column

In the test, I insert 50k records and measure the time spent, remove the object, recreate the object, then insert 50k records again. I measure 10 times after running them 10 times. This ensures the data is in the cache for the bulk copy and the database files have been growned and have enough room for the test. Table structure is below

create table PerfTest(ID int identity(1,1) , text char(1000) not null default(replicate('a', 1000)))

create table tempdb..PerfTest(ID int identity(1,1) , text char(1000) not null default(replicate('a', 1000)))

create table ##PerfTest(ID int identity(1,1) , text char(1000) not null default(replicate('a', 1000)))

create table #PerfTest(ID int identity(1,1) , text char(1000) not null default(replicate('a', 1000)))

declare @PerfTest table (ID int identity(1,1) , text char(1000) not null default(replicate('a', 1000)))

---for one by one insert, I use following script

----begin transaction

select @Max = 50000

select @i = 0, @TimeFrom = SYSDATETIME()

while @i < @Max

begin

insert into PerfTest default values

select @i = @i + 1

end

select @TimeTo = SYSDATETIME()

--save the time differences

--commit

-- For bulk insert, I use following script

select @TimeFrom = SYSDATETIME()

insert into PerfTest(text) select text from mtest.dbo.data

select @TimeTo = SYSDATETIME()

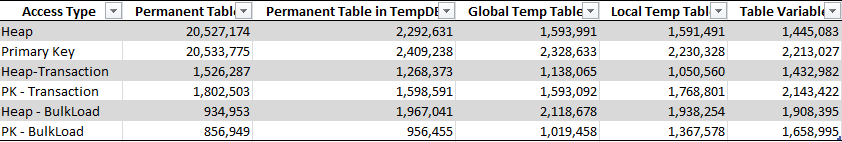

Now you may want to know the final result immediately rather than going through results for each test section and then come to the final. here is the result. Unit of Measure is MicroSecond.

Here is the supporting data. each of the numbers below is an average of results from 10 consecutive execution using same parameters. For better formatting, I screenshot it below. If you want to see the full data sheet, please download it here.

Few things I think I can conclude below from the tests

- Inserting records in the object in tempdb is usually faster than doing same thing in user DB. TempDB is optimized for data modification. Since tempdb is re-created when service restarts, it does not need to undergo crash-recovery process, hence, it does not need to log everything for this purpose. But it does need transaction logs for rollback.

- Permenant table in tempdb like a temp table in terms of inserting speed.

- If you need to insert many records into a table, you’re better off inserting them in one big transaction or bulk loading. It’s 10 times faster than committing records one by one. This is always a tread-off in term of performance under high concurrency scenarios. If you use one transaction for all 50K insertion, you save time on data mdification, however, you are taking more locks from the system, having long run transactions, and possibly you might block others.

- Table variables is not transactional. The inserting speed is not affected too much by applying transactions at different level. It seems perform less effective than regular temp tables and tables in tempdb when there are indexes on it. It does not work well with bulk insert.

- Bulk insert performs well with tables in user database, tables in tempdb, and global temp tables. However, it does not work well with local temp objects.

- Table with clustered primary key does have impact on data insertion. But for the bulk load, having ever-increase primary key is faster than heap!

- User tables in the user database does not always slower than objects in tempdb.

I believe you will conclude more than I do from the spreadsheet here. Let me know your ideas. Have a wonderful weekend.

nice article.. Thanks for sharing

Thank you very much. I’ve been looking for this for ages.

Table variable, #temp tables will snag when volume of data increases. We tested #temp, table variables in our environment with a data volume of 600 million, Permanent temporary table created in user databases will give outstanding performance. CTE, table variables, #temp tables are suitable only for small volume of data. I will always recommend to use tables. But the conclusion is all have its own pros and cons. It depends and varies on requirements.

From searching perspective, there is no differences between # temp tables(if you have proper indexes on it) and tables in regular user database.

thank you!!!

Thanks, John. It is objective tests like this that help to dispel tribal knowledge that is very often just wrong.